Introduction: enthusiasm for AI and the practical challenge#

Let’s be honest: if someone tells you that AI is the “future”, file it under yesterday’s news. AI is already here, taking selfies with our data and whispering suggestions in support tickets. The good news: you don’t need to reinvent your entire Java architecture to take advantage of it. Spring AI is the elegant bridge that connects your APIs, databases, and AI models without forcing you to rebuild the backend.

What is AI and why does it matter now#

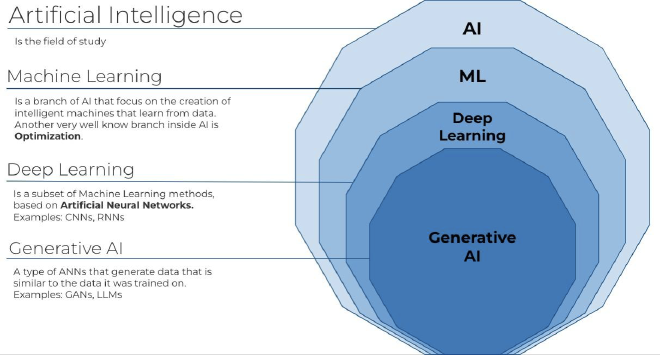

In a nutshell: AI enables machines to perform tasks associated with human intelligence (understanding, predicting, generating), thanks to technologies that have recently converged:

- Machine Learning (ML): learning from data instead of coding fixed rules. Fewer if-else, more statistics.

- Deep Learning (DL): deep networks that excel in vision, voice, and text.

- Transformers and LLMs: the Transformer architecture gave rise to large language models that not only classify but generate coherent text.

- Hardware and runtimes: GPUs and optimizations (ONNX, TensorRT, JVM runtimes) that have cut work that used to take weeks down to hours or days.

Key concepts (brief guide for developers)#

- ML: fraud detection, recommenders, classification.

- DL: deep networks for representation and perceptual tasks.

- Generative AI and LLMs: text generation, code, summaries, and documentation.

- Hardware/runtime: GPUs for training; ONNX and runtimes for efficient inference, even from the JVM.

Java and AI: myths and realities#

Myth: “Java isn’t for AI”. Reality: it’s not the first choice for training models from scratch, but it’s excellent for integrating, orchestrating, and executing inference in production.

- Java in AI: Apache Spark and DeepLearning4J are used in production for data preparation and models.

- JVM improvements: Project Panama and the Vector API optimize numerical operations and access to native libraries — very useful for inference.

- Practical path: many apps consume models via APIs (OpenAI, Anthropic, Azure, Google) from Java.

Moral: Java plays its part, and plays it well.

What is Spring AI#

Spring AI applies Spring principles to the AI world: portability, decoupling, beans, and clear contracts. Result: you plug in different providers without redoing business logic.

Key features#

- Multi-provider support: OpenAI, Azure OpenAI, Amazon Bedrock, Google, Anthropic, Ollama, and more.

- Portable API: interfaces like ChatClient and EmbeddingModel to change provider with minimal changes.

- Structured outputs: map responses directly to POJOs and avoid fragile parsing.

- Function Calling / Tools: the model can request that the app execute code and then resume with the result.

- Chat Memory: maintain context between interactions for coherent conversations.

- Integration with vector stores: RAG (Retrieval Augmented Generation) so the model can query your documents.
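The function-calling round trip from the list above can be illustrated without any AI library. This is a minimal plain-Java sketch, not Spring AI's tool API: `ToolRegistry`, `ToolCallingSketch`, and the `checkInventory` tool are hypothetical names, and the model's request is simulated as a tool name plus a single string argument.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Plain-Java illustration of the function-calling round trip.
// Hypothetical names throughout; this is NOT Spring AI's tool API.
class ToolRegistry {
    private final Map<String, Function<String, String>> tools = new HashMap<>();

    // Register a Java function the "model" is allowed to request by name.
    void register(String name, Function<String, String> tool) {
        tools.put(name, tool);
    }

    // Execute the requested tool and return text to feed back to the model.
    String dispatch(String toolName, String argument) {
        Function<String, String> tool = tools.get(toolName);
        if (tool == null) {
            return "Unknown tool: " + toolName;
        }
        return tool.apply(argument);
    }
}

class ToolCallingSketch {
    static ToolRegistry defaultRegistry() {
        ToolRegistry registry = new ToolRegistry();
        // Stand-in for a real inventory lookup.
        Map<String, Integer> stock = Map.of("SKU-1", 12, "SKU-2", 0);
        registry.register("checkInventory",
                sku -> sku + ": " + stock.getOrDefault(sku, 0) + " in stock");
        return registry;
    }

    public static void main(String[] args) {
        // Simulated model request: call checkInventory with "SKU-1".
        String toolResult = defaultRegistry().dispatch("checkInventory", "SKU-1");
        // In a real flow this text is appended to the conversation and the model resumes.
        System.out.println(toolResult);
    }
}
```

In a real application the framework handles this loop for you; the sketch only shows why the tool result has to travel back to the model as text.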

Design and philosophy: interfaces and decoupling#

At the heart is the contract: ChatModel / ChatClient. The advantage is that your logic depends on stable interfaces and forgets provider details. Switching from OpenAI to Anthropic is usually a configuration change, not a rewrite. The same decoupling makes testing easier (mock the ChatClient) and supports streaming responses for more fluid interfaces.
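To make the decoupling concrete, here is a minimal sketch in plain Java. It deliberately avoids the real Spring AI types: `AssistantClient` and `InvoiceAssistant` are hypothetical names standing in for a portable chat contract and the business logic that depends on it.

```java
// Hypothetical contract standing in for a portable chat interface.
interface AssistantClient {
    String ask(String systemPrompt, String userMessage);
}

// Business logic depends only on the interface, never on a provider SDK.
class InvoiceAssistant {
    private final AssistantClient client;

    InvoiceAssistant(AssistantClient client) {
        this.client = client;
    }

    String statusOf(String invoiceId) {
        return client.ask("You are an expert billing assistant",
                "What is the status of invoice " + invoiceId + "?");
    }
}

class PortableClientSketch {
    public static void main(String[] args) {
        // A lambda acts as the test double; a real adapter would call a provider.
        AssistantClient stub = (system, user) -> "PAID";
        System.out.println(new InvoiceAssistant(stub).statusOf("12345"));
    }
}
```

Because `InvoiceAssistant` only sees the interface, swapping the stub for a provider-backed adapter would not touch the business logic, which is the property the portable API is meant to give you.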

Practical use cases and demos you can try#

- Basic chat + prompt engineering

  - Define a System Role to guide the model.

  - Use ChatClient to handle structured conversations.

- Conversation memory

  - Save the essentials with Advisors (e.g., MessageChatMemoryAdvisor) so the model remembers what’s relevant.

- Structured outputs

  - Map responses to POJOs with StructuredOutputConverter or BeanOutputConverter: less regex, more type safety.

- Function calling

  - Allow the model to request execution of a Java function (check inventory, get the current date) and feed the result back into the conversation.

- RAG (Retrieval Augmented Generation)

  - Index documents in Milvus, Pinecone, or a similar vector store, retrieve relevant chunks via embeddings, and ground the LLM’s answer in concrete evidence.
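The retrieval step of RAG is easier to picture with a toy example. The sketch below is plain Java with hand-written embeddings. `InMemoryVectorIndex` is a hypothetical stand-in for a real vector store such as Milvus or Pinecone, and the three-dimensional vectors are invented purely for illustration (a real embedding model produces much larger ones).

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Toy in-memory stand-in for a vector store (hypothetical, not a Spring AI class).
class InMemoryVectorIndex {
    private static final class Chunk {
        final String text;
        final double[] embedding;

        Chunk(String text, double[] embedding) {
            this.text = text;
            this.embedding = embedding;
        }
    }

    private final List<Chunk> chunks = new ArrayList<>();

    void add(String text, double[] embedding) {
        chunks.add(new Chunk(text, embedding));
    }

    // Cosine similarity; assumes equal-length, non-zero vectors.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Return the k chunk texts most similar to the query embedding.
    List<String> topK(double[] query, int k) {
        return chunks.stream()
                .sorted(Comparator.comparingDouble(
                        (Chunk c) -> cosine(query, c.embedding)).reversed())
                .limit(k)
                .map(c -> c.text)
                .collect(Collectors.toList());
    }

    // Enrich the prompt with the retrieved evidence before calling the LLM.
    static String buildPrompt(String question, List<String> evidence) {
        return "Answer using only this context:\n"
                + String.join("\n", evidence)
                + "\n\nQuestion: " + question;
    }

    public static void main(String[] args) {
        InMemoryVectorIndex index = new InMemoryVectorIndex();
        index.add("Refund policy", new double[] {1, 0, 0});
        index.add("Shipping times", new double[] {0, 1, 0});
        List<String> evidence = index.topK(new double[] {1, 0, 0}, 1);
        System.out.println(buildPrompt("How do refunds work?", evidence));
    }
}
```

A production setup would replace the hand-written vectors with an embedding model and the list scan with a real vector store, but the flow stays the same: embed, retrieve the nearest chunks, and put them in the prompt.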

Example pseudocode#

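    import org.springframework.ai.chat.client.ChatClient;

    // Spring AI auto-configures a ChatClient.Builder bean; build the client from it.
    ChatClient chatClient = applicationContext.getBean(ChatClient.Builder.class).build();

    // Fluent request: system role, user message, then map the reply to a POJO.
    // InvoiceStatus is an application-defined class, not part of Spring AI.
    InvoiceStatus status = chatClient.prompt()
            .system("You are an expert billing assistant")
            .user("What is the status of invoice 12345?")
            .call()
            .entity(InvoiceStatus.class);

Integration into the enterprise ecosystem#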

Spring AI doesn’t replace your ERP or your IT department; it connects them with additional intelligence. Practical patterns:

- Isolate business logic behind the portable API.

- Implement caching and rate limits when consuming external APIs.

- Use RAG for traceable and verifiable responses.

- Filter sensitive data before sending to the cloud, or use local models if policy requires it.
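As an illustration of the last pattern, a pre-send filter can be as simple as a couple of regular expressions. This is a rough sketch, assuming that emails and long digit runs (card-like numbers) are what you need to mask. `PromptRedactor` is a hypothetical helper, and real policies usually need a dedicated PII detection step.

```java
import java.util.regex.Pattern;

// Hypothetical helper: masks obvious sensitive values before a prompt leaves your network.
class PromptRedactor {
    // Simplified email pattern; good enough for a sketch, not RFC-complete.
    private static final Pattern EMAIL =
            Pattern.compile("[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}");
    // Standalone runs of 13 to 19 digits, the typical length range of card numbers.
    private static final Pattern CARD_LIKE = Pattern.compile("\\b\\d{13,19}\\b");

    static String redact(String text) {
        String withoutEmails = EMAIL.matcher(text).replaceAll("[EMAIL]");
        return CARD_LIKE.matcher(withoutEmails).replaceAll("[NUMBER]");
    }

    public static void main(String[] args) {
        System.out.println(redact("Contact ana@example.com about card 4111111111111111"));
    }
}
```

Short identifiers such as an invoice number pass through untouched, so the model still gets the context it needs.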

Best practices#

- Define the purpose with a good system prompt.

- Save memory judiciously: relevant, summarized, and with consent when applicable.

- Prefer structured outputs when possible.

- Design fallbacks: keep the UX working normally if the LLM call fails.

- Monitor costs and latency: LLM calls cost money and aren’t always the best solution.

- Version and test prompts like code.
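The fallback and latency points above can be combined in one small wrapper. This is a plain-Java sketch under simple assumptions: the model call is represented by a `Supplier<String>`, failures surface as runtime exceptions, and `FallbackSketch` is a hypothetical name. Printing to standard output stands in for whatever metrics system you actually use.

```java
import java.util.function.Supplier;

class FallbackSketch {
    // Runs the (hypothetical) model call; returns the fallback text if it throws.
    static String callWithFallback(Supplier<String> modelCall, String fallback) {
        long start = System.nanoTime();
        try {
            return modelCall.get();
        } catch (RuntimeException e) {
            // Degrade gracefully instead of surfacing the provider error to the user.
            return fallback;
        } finally {
            // Record latency for every call, successful or not.
            long millis = (System.nanoTime() - start) / 1_000_000;
            System.out.println("LLM call took " + millis + " ms");
        }
    }

    public static void main(String[] args) {
        String answer = callWithFallback(
                () -> { throw new IllegalStateException("provider unavailable"); },
                "The assistant is unavailable right now; please try again later.");
        System.out.println(answer);
    }
}
```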

Conclusions and next steps#

- Spring AI makes it easy to integrate AI into Java apps by leveraging Spring patterns.

- With portable APIs, structured outputs, function calling, chat memory, and vector store support, you have the pieces for advanced cases like RAG.

- Java + Spring AI is a practical combination: the JVM provides infrastructure and Spring AI connects to cutting-edge models.

Hands-on suggestions#

- Review the official Spring AI documentation and follow a tutorial to configure ChatClient.

- Try a RAG case: index documents, create embeddings, and add semantic search.

- If you want to run locally: explore ONNX and JVM runtimes; for massive data, look at Apache Spark and DeepLearning4J.

If you’ve made it this far (wow, you’re officially my favorite), I can prepare a step-by-step example: a starter Spring Boot + Spring AI project that shows basic chat, structured outputs, and a RAG integration ready to run. Want me to build it? Tell me which provider you prefer (or if you want everything local) and I’ll get to work. 😉